The Internet is likely the world’s largest source of information. Collecting and analyzing data from websites has vast potential applications in a wide range of fields, including data science, corporate intelligence, and investigative reporting.

Data scientists are constantly looking for new information and data to modify and analyze. Scraping the internet for specific information is currently one of the most popular methods for doing so.

Are you ready for your first web scraping project? Before you start, it helps to understand what web scraping actually is and how it works. We will cover those fundamentals first and then look at the best web scraping techniques.

What is Web Scraping?

The technique of gathering and processing raw data from the Web is known as web scraping, and the Python community has developed some rather potent web scraping tools. A data pipeline is used to process and store this data in a structured manner.

Web scraping is a common practice today with numerous applications:

- Marketing and sales businesses can gather lead-related data by using web scraping.

- Real estate companies can obtain information on new developments, for-sale properties, etc. by using web scraping.

- Price comparison websites like Trivago frequently employ web scraping to get product and pricing data from different e-commerce websites.

You can scrape the web with many programming languages, and each of them offers several libraries for the job. Python is one of the most popular and trusted languages for effective web scraping.

About Python

Python, first released in 1991, is one of the most popular languages for web scraping. It is frequently used for building websites, developing software, writing system scripts, and more. The language is a cornerstone of the online sector and is widely used in commerce around the world.

Python can be used on a server to build web applications. It can work alongside other software to build workflows and connect to database systems. It can also read and modify files.

It can also handle large volumes of data, perform complex mathematical operations, speed up prototyping, and build production-ready software.

How can you use Python for web scraping?

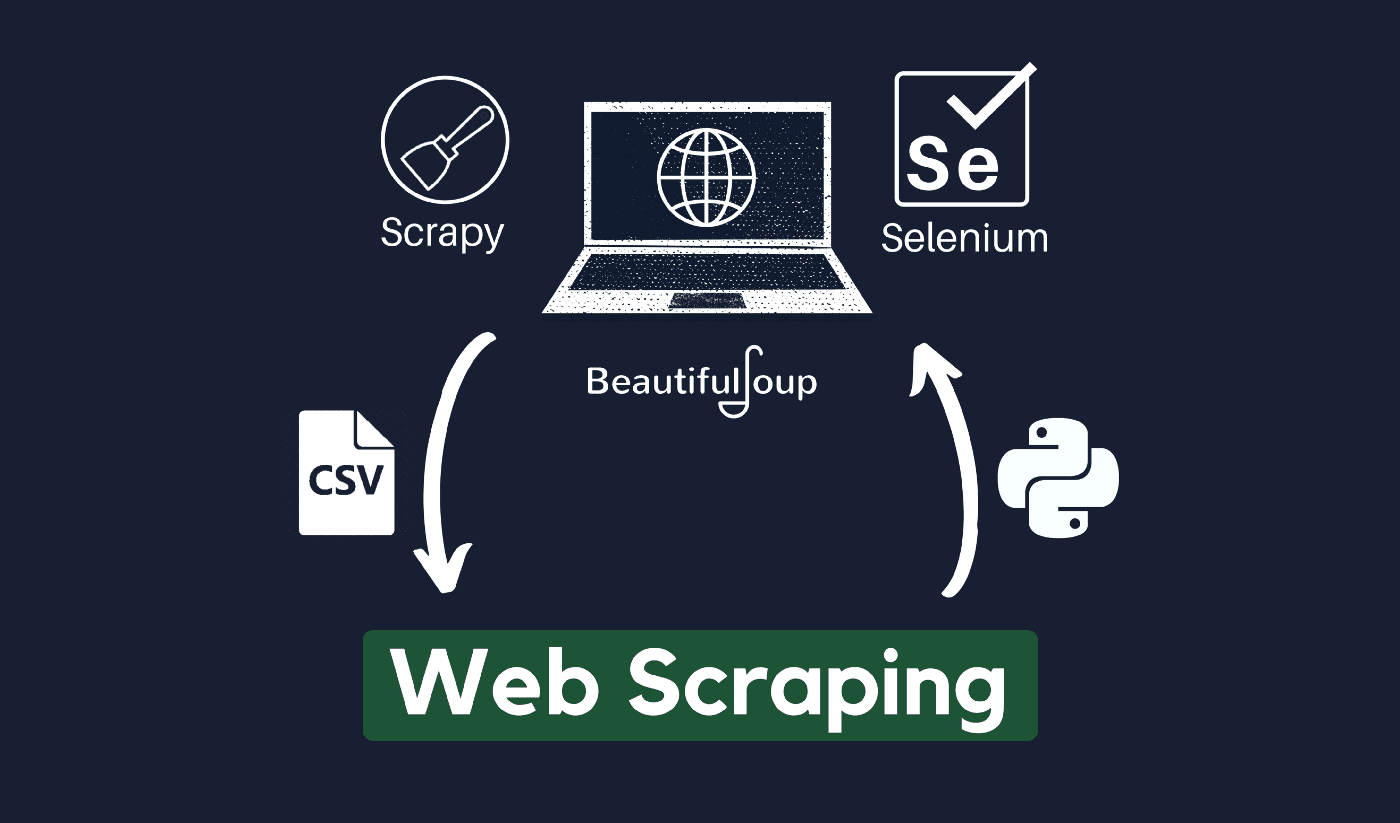

You’ll likely need to go through three steps in order to scrape and extract any information from the internet: obtaining HTML, getting the HTML tree, and finally extracting the information from the tree.

The Requests library can retrieve the HTML code for a given URL. BeautifulSoup then parses that HTML into a tree and extracts the data you need, which you can organize with plain Python.
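The three steps can be sketched roughly as follows. This is a minimal illustration, not a complete scraper: it assumes the third-party `requests` and `beautifulsoup4` packages are installed, and the function names `fetch_html` and `extract_links`, as well as the sample markup, are made up for this example.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Step 1: download the raw HTML for a page."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return response.text


def extract_links(html):
    """Steps 2 and 3: build the HTML tree, then pull data out of it."""
    # "html.parser" is the parser bundled with Python, so no extra install.
    soup = BeautifulSoup(html, "html.parser")
    links = []
    # href=True skips <a> tags that have no href attribute.
    for anchor in soup.find_all("a", href=True):
        links.append({"text": anchor.get_text(strip=True), "href": anchor["href"]})
    return links


# Hypothetical inline sample so the parsing step can be tried offline.
SAMPLE_HTML = """
<html><body>
  <h1>Listings</h1>
  <a href="/item/1">First item</a>
  <a href="/item/2"> Second item </a>
  <a>No link here</a>
</body></html>
"""

if __name__ == "__main__":
    for link in extract_links(SAMPLE_HTML):
        print(link["href"], link["text"])
```

For a live page, you would pass the result of `fetch_html(url)` to `extract_links` instead of the inline sample.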

Before putting your Python skills to work on web scraping, always check the target website’s acceptable use policy to see whether accessing the site with automated tools violates its conditions of use.
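Many sites also publish a machine-readable `robots.txt` file. It is not a substitute for reading the conditions of use, but it is a quick automated check. The sketch below uses Python’s standard `urllib.robotparser` module; the sample rules, the `example-bot` user agent, and the helper names are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real crawler would load the file from
# the site itself, for example with parser.set_url(...) and parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent="example-bot"):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(SAMPLE_ROBOTS_TXT)
    print(is_allowed(rules, "https://example.com/public/page"))
    print(is_allowed(rules, "https://example.com/private/data"))
    print(rules.crawl_delay("example-bot"))
```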

How does web scraping work?

Web scraping is typically carried out by spiders. They retrieve HTML documents from relevant websites, extract the necessary content based on business logic, and then store it in a chosen format.
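The retrieve, extract, and store loop of a spider might look something like the sketch below. It uses only the standard library, and the "business logic" here is simply reading each page’s title. The `run_spider` function, the `TitleParser` class, and the in-memory `FAKE_PAGES` site are hypothetical stand-ins; a real spider would pass a function that performs HTTP requests as `fetch`.

```python
import csv
import io
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text found inside <title> elements."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def run_spider(urls, fetch, output):
    """Fetch each URL, extract its title, and write one CSV row per page."""
    writer = csv.writer(output)
    writer.writerow(["url", "title"])
    for url in urls:
        html = fetch(url)                              # retrieve the document
        parser = TitleParser()
        parser.feed(html)                              # extract the content
        writer.writerow([url, parser.title.strip()])   # store it as CSV


# Hypothetical in-memory "site" standing in for real HTTP responses.
FAKE_PAGES = {
    "https://example.com/a": "<html><head><title>Page A</title></head></html>",
    "https://example.com/b": "<html><head><title> Page B </title></head></html>",
}

if __name__ == "__main__":
    buffer = io.StringIO()
    run_spider(FAKE_PAGES, FAKE_PAGES.get, buffer)
    print(buffer.getvalue())
```

Passing `fetch` in as a parameter keeps the extraction and storage code independent of how pages are downloaded, which makes the spider easier to test.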

This article serves as a guide for creating highly scalable scrapers.

Python frameworks and a few code snippets are enough to scrape data in a number of straightforward ways, and several guides are available to help you put them into practice.

Scraping a single page is simple, but scraping millions of pages makes it difficult to manage the spider code, gather the data, and maintain a data warehouse. To make scraping simple and precise, we’ll examine these problems and their fixes.

Additional Tip: Use rotating IPs and Proxy Services

As you have seen, web scraping lets you gather information from the web with a set of programming commands. Keep in mind, though, that your web scraping activity can be traced through your IP address.

This won’t be much of an issue if the data you are scraping is in the public domain. But if you are scraping private data from, say, a social media site, you may land in trouble if your IP address is tracked down.

So, to prevent your spider from being blacklisted, it is preferable to use proxy services and rotate IP addresses.
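One simple way to rotate proxies is to cycle through a pool and attach the next one to each request. The sketch below only builds the configuration; the proxy addresses are hypothetical placeholders, and `proxy_rotation` is a name chosen for this example.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace them with addresses from your provider.
PROXY_POOL = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def proxy_rotation(pool):
    """Yield one proxies mapping per request, cycling through the pool."""
    for proxy_url in cycle(pool):
        # Same shape as the `proxies` argument accepted by requests.get().
        yield {"http": proxy_url, "https": proxy_url}


if __name__ == "__main__":
    rotation = proxy_rotation(PROXY_POOL)
    for _ in range(4):
        print(next(rotation)["http"])
```

With the Requests library, each call would then look like `requests.get(url, proxies=next(rotation), timeout=10)`.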

By no means are we encouraging you to use web scraping to gather illegal or private data or to engage in malicious spyware activities.

But if you are gathering data that might be private, it is recommended to mask or rotate your IP address or use a proxy server to avoid getting traced.

Is web scraping legal?

No general internet rule or guideline states that web scraping is illegal. Web scraping is generally considered legal, provided you are working with public data.

In late January 2020, it was announced that scraping publicly available data for non-commercial purposes was entirely allowed.

Publicly available information is data that anyone can access online without a password or other authentication. It includes content found on Wikipedia, on public social media pages, or in Google search results.

However, some websites explicitly forbid users from scraping their data. Scraping data from social media is sometimes considered illegal.

The reason is that some of that data isn’t accessible to the general public, such as when a user makes their information private. In this instance, scraping the information is prohibited. Scraping information from websites without the owner’s consent can also be considered harmful.

Get the best out of the web through Web Scraping!

One of the fundamental abilities a data scientist requires is web scraping.

Keep in mind that not everyone will want you to access their web servers for data. Before beginning to scrape a website, make sure you have read the Conditions of Use. Also, be considerate when timing your web queries to avoid overwhelming a server.
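A small throttle is one way to space out requests. The sketch below enforces a minimum delay between consecutive calls; the `Throttle` class and the two-second interval are illustrative choices, not values recommended by any particular site.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock      # injectable so the class can be tested
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Block until min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request = now


if __name__ == "__main__":
    throttle = Throttle(min_interval=2.0)
    for page in ["page-1", "page-2", "page-3"]:
        throttle.wait()  # call this right before each request
        print("fetching", page)
```

The first call returns immediately, and every later call sleeps only for whatever part of the interval has not already elapsed.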
